Details

Model Selection Assistant

This project explored how UX design and explainable AI could better support language analysts as their workflows expanded from relying on a single default speech-to-text (STT) model to navigating multiple available models. Through primary research, we discovered that as analysts' options increased, they struggled to understand tradeoffs, build trust, and make confident decisions because explanation and comparison support was limited. We designed a research-driven, layered model comparison experience that structured insights by depth, enabling quick scanning, meaningful comparison, and deeper exploration only when needed. The result was a scalable interaction approach that reduced cognitive load while aligning with real analyst workflows.

Client

Laboratory of Analytical Sciences

Year

Jan-Dec 2025

Role

UX Design

Team

2 PIs and 3 research assistants

A research-driven approach to supporting analyst decision-making

This project followed an iterative design thinking process that balanced human-centered research with the technical complexity of explainable AI systems. The process emphasized understanding analyst workflows, decision pressures, and trust-building behaviors before moving into solution design.

Each phase built intentionally on the last. Early research focused on understanding how analysts currently work and how future access to multiple STT models could change their decision-making. Insights from this phase informed clear problem framing and prioritization, which then guided concept exploration, prototyping, and testing. Throughout the process, designs were continuously refined to reduce cognitive load, surface meaningful signals, and support confidence without oversimplifying complexity.

The result was a layered, explainable interface concept, shaped through research, iteration, and feedback, and designed to adapt to varying analyst needs while remaining transparent, flexible, and grounded in real-world use cases.

Uncovering analysts' workflows and constraints

To inform the design, we conducted primary and secondary research across three areas: analyst workflows, existing speech-to-text tools, and explainable AI within human–AI collaboration. Through literature reviews and small-scale interviews, we learned how analysts make decisions today, where current STT systems fall short, and how explanation helps build confidence and accountability when working with AI-driven recommendations.

Insights from the research showed that many tools prioritize performance metrics and outputs, offering limited support for understanding why a model is recommended for a given audio scenario. Analysts currently have little agency because they rely on a single default STT model, and with limited tools, pulling insights from audio takes considerable time. Research in explainable AI emphasized that trust is not built through accuracy alone, but through reasoning that users can access, question, and stand behind, especially in high-stakes analytical work.

Breaking down analysts' workflows to understand pain points

Persona development and user journey mapping showed that being limited to one default model created many pain points across analysts' workflows. UX patterns in their current system left some available tools unused and pushed analysts into manual work, adding time and cognitive overload. This revealed opportunities for our speculative system to provide adaptivity and begin calibrating trust for a diverse range of users.

Layered explanations explore trust, transparency, and adaptability

One of the most important insights from this research was the value of SAP's layered explanations framework for explainable AI. Instead of presenting all system logic at once, explanations can be structured so that analysts progressively access deeper levels of detail about a recommendation.

In this framework, high-level signals communicate what the system recommends, additional context explains why a model is appropriate for a specific audio scenario, and deeper layers reveal how the system arrived at that recommendation. This approach supports both quick decision-making and deeper investigation, giving analysts control over how much information they engage with while maintaining transparency and trust.

This insight became a foundational principle for the rest of the project and directly informed how model recommendations, confidence signals, and interaction patterns were later designed.

When choices increase, confidence can decrease

Synthesizing the analyst persona and end-to-end journey helped clarify where friction, uncertainty, and decision pressure occur when working with speech-to-text systems. While access to multiple STT models introduces flexibility, it also raises new questions around trust, usability, and interpretation, especially for analysts who are not machine learning experts.

To focus the design space and guide exploration, we reframed these insights into a set of How Might We questions that capture the core challenges of model selection, explainability, and analyst confidence.

Low-fidelity concepts grounded in research

After several ideation sessions, we chose three low-fidelity concept directions to explore how multiple STT models and explanations could be introduced within an existing audio playback system. All concepts were intentionally designed on top of a provided screen from the current analyst workflow tool, allowing us to explore new interactions while staying grounded in a familiar interface. These early concepts helped us evaluate tradeoffs between clarity, depth, and cognitive load.

List-based model recommendations

When users are working with a model, the system automatically suggests alternative models based on performance and community usage data, presented in a concise list format. This approach focuses on quick comparison and supports progressively deeper explanation levels as users engage further.

Visual comparison

The system visually compares the current model with top alternatives across key performance metrics, allowing users to quickly identify strengths and weaknesses. This concept emphasizes pattern recognition and supports comparison without relying solely on text.

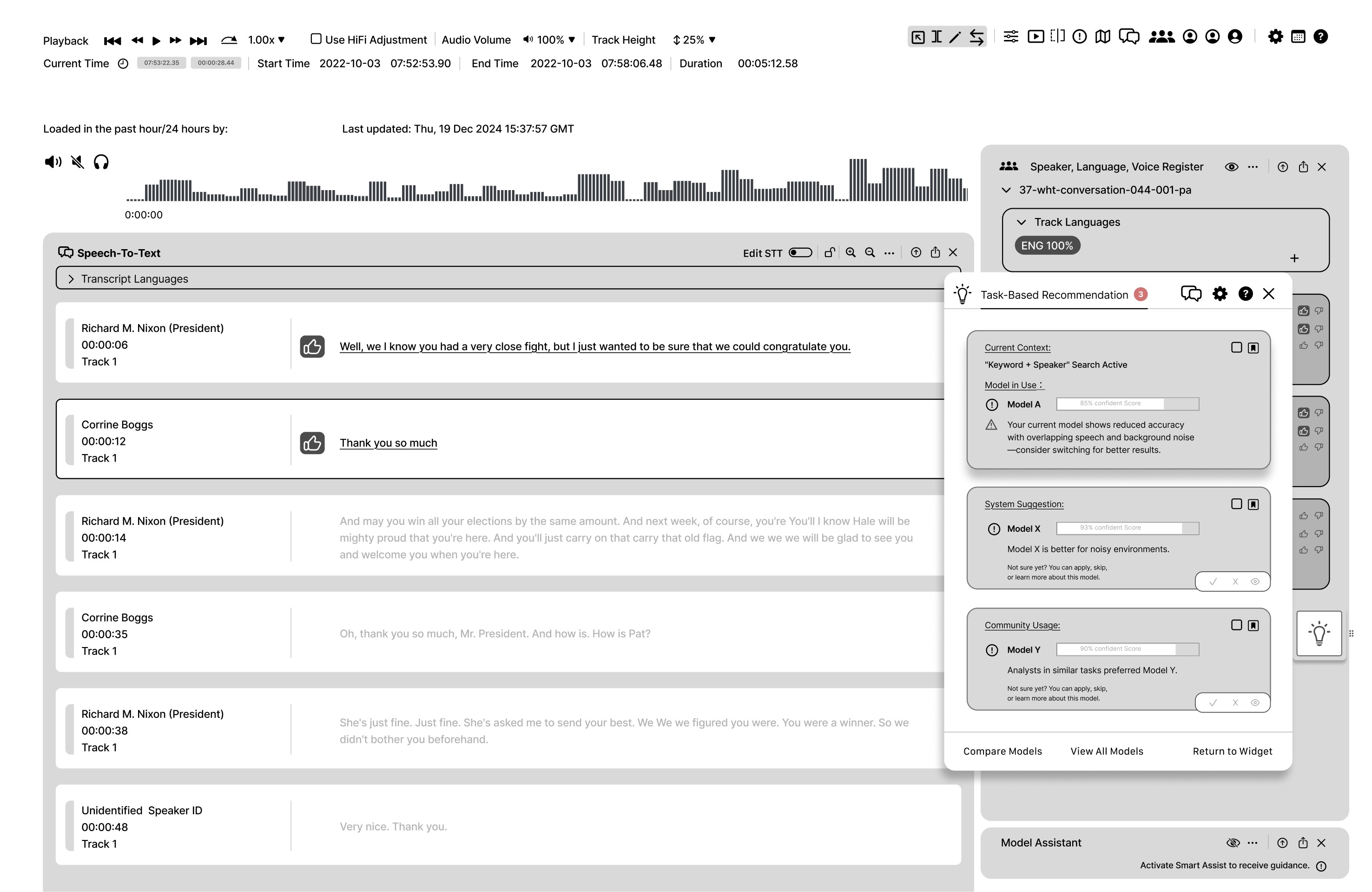

Task-focused expansion

Based on the user’s current activity, the system suggests not only better-suited models but also potential next tasks. This approach supports forward-thinking exploration beyond the user’s initial query.

Learning Through Feedback and Iteration

Feedback on the early concepts challenged several assumptions and helped clarify what truly supported analyst decision-making. I initially expected visual comparisons to be the most effective way to evaluate STT models, but stakeholders found these visualizations difficult to interpret and more cognitively demanding than anticipated. While informative, they required additional education and slowed quick decision-making.

In contrast, stakeholders responded strongly to the three-level explanation structure, noting its value in supporting analysts with different levels of expertise. The ability to choose how deeply to engage with a recommendation kept explanations glanceable while still allowing deeper investigation when needed. We also learned that analysts typically work with multiple tabs open rather than a single continuous flow, reinforcing the need to surface recommendations early within the broader workflow.

One of the most positive responses centered on community usage and peer-driven insights. Stakeholders saw this as a powerful way to build trust alongside the system, allowing analysts to learn from how others approached similar audio and model decisions. Rather than replacing individual judgment, community signals were viewed as a collaborative layer that supported confidence, sensemaking, and accountability. Based on this feedback, the list-based and task-focused concepts were favored over the visualization-heavy approach.

Next, we transitioned into mid-fidelity designs that aligned with the visual system and interaction patterns established by the provided green screens. This allowed us to move beyond isolated concepts and explore how model recommendations, explanations, and collaboration signals could function as part of a more complete system. At this stage, we explored two distinct directions that reflected different balances between automation and analyst control.

System-Initiated Recommendations

In this direction, the system detects key audio characteristics based on the selected audio cut and automatically generates a ranked list of STT model recommendations. Analysts are presented with glanceable Level 1 explanations that combine system recommendations and community usage signals to support quick decision-making.

To go deeper, analysts can preview a model’s transcript before fully committing, then access additional explanation layers once they enter the model view. This approach emphasizes system initiative and efficiency, supporting fast workflows while still allowing deeper validation when needed.

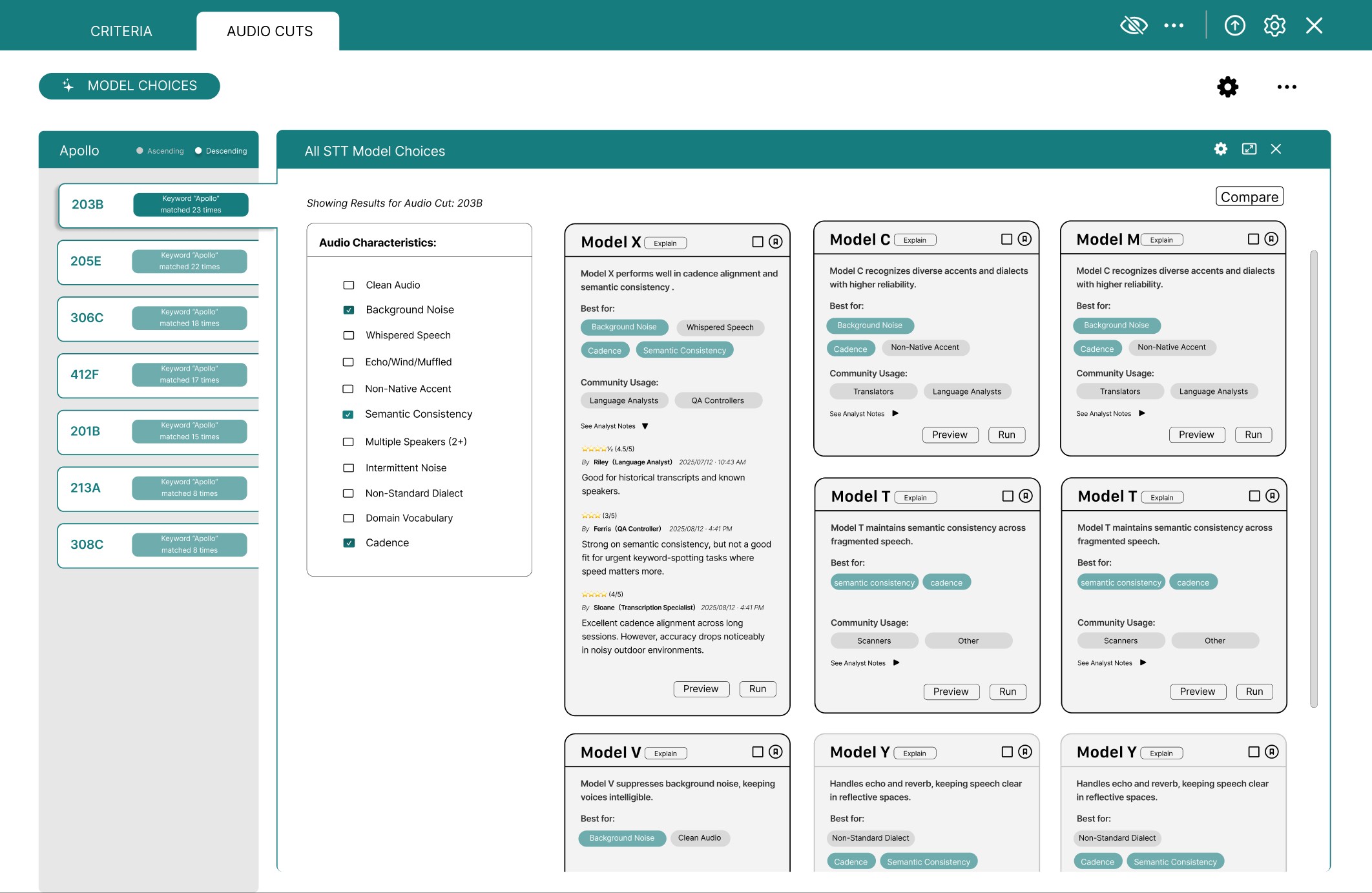

Analyst-Driven Model Selection

This direction emphasizes analyst agency by allowing users to explicitly select which audio characteristics best match their current audio selection. Based on these inputs, the system returns a tailored set of STT model recommendations presented as comparable model cards.

Community notes and analyst feedback are surfaced as trust signals alongside each recommendation, helping analysts validate system suggestions through real-world usage context. This approach supports more deliberate exploration and deeper understanding of model behavior while keeping explanations structured and accessible.

Understanding explanation preferences, trust calibration, and collaboration

To evaluate the two mid-fidelity directions, we conducted a survey with 28 participants spanning a range of analyst seniority levels and familiarity with AI systems. Participants included junior, intermediate, and senior analysts with varying exposure to STT and AI-driven tools. The goal of this study was to understand how analysts interpret STT model recommendations, which explanation strategies they rely on when making selections, and how trust is built across different levels of experience.

We then analyzed responses related to usability, confidence, trust, and likelihood of using the system in real workflows. We found no correlation between seniority level and an analyst’s ability to understand the system or make appropriate model selections. Instead, trust and confidence varied based on individual familiarity with AI. Across experience levels, participants consistently valued the three-level explanation structure, which supported both quick decision-making and deeper investigation when needed.

Open-ended responses revealed several strong themes. Analysts across all seniority levels emphasized the importance of community usage and peer-driven insights as trust signals, often viewing them as more influential than system-generated recommendations alone. Community feedback was seen as a way to validate decisions, learn from peers, and feel more confident standing behind model selections.

Participants also highlighted the importance of workflow efficiency and explanation clarity, including the ability to preview transcripts, jump to keywords, and better understand model limitations. Together, these insights reinforced the need for model selection tools that balance transparency, flexibility, and collaboration.

A layered, explainable system shaped by analyst feedback

Insights from the survey directly informed the final design direction. Findings confirmed that trust was not tied to seniority, but to how supported analysts felt in understanding and validating model recommendations. Analysts consistently valued adaptability, community insight, and the ability to control explanation depth, which reinforced the need for a layered system that could support both quick decisions and deeper investigation.

The final solution brings these insights together into a high-fidelity system that integrates into the existing audio analysis workflow. Rather than forcing a single explanation style, the design supports progressive engagement, allowing analysts to move between glanceable recommendations, contextual reasoning, and technical detail based on their needs, confidence, and task complexity.

To bring the final system to life, we used a scenario-based approach that follows Riley, a fictional language analyst working at the Nixon Historical Audio Task Force. Riley is piloting a new suite of specialized speech-to-text models optimized for different audio conditions, and her task is to identify conversations related to the Apollo space missions. This context allows the system to be shown in both fast-moving analysis and deeper validation moments within a realistic workflow.

Level 1 — glanceable decision-making

Prioritizes plain-language, task-specific recommendations.

Level 2 — context and community insight

Synthesizes technical data with community input.

Level 3 — technical validation

Tailored for technically oriented users who need granular metrics (e.g., Word Error Rate, training data).

Layered explanations support trust, adaptability, and analyst growth

Trust is built through consistent, transparent interactions that adapt to an analyst’s needs over time. Layered explanations allow analysts to start with glanceable guidance and progressively explore deeper reasoning as their confidence and curiosity grow. By supporting this flexibility, the system reinforces analyst agency while also enabling learning and long-term trust calibration throughout the analyst’s journey.

Leveraging social intelligence to calibrate trust

Analysts consistently showed that they rely on peer knowledge to build confidence in complex decisions, especially when system recommendations alone feel abstract. Surfacing community usage, peer-validated performance, and task appropriateness adds a collaborative layer of trust that complements system reasoning rather than replacing it. By embedding social intelligence directly into the workflow, the system helps analysts calibrate trust through shared experience and collective insight.

Supporting adaptability by aligning with analyst workflows

The system is designed to align with existing analyst workflows by allowing a quick preview and comparison of model outputs before a full selection is made. This supports flexible exploration without disrupting momentum or forcing early commitment. By making comparisons glanceable and accessible, the system reinforces adaptability while helping analysts build confidence in their decisions.

This project demonstrates how expanding analyst tools from a single-model workflow to a multi-model decision system can improve confidence, flexibility, and decision quality. By introducing layered explanations and community-driven trust signals, the final design reduces cognitive overhead while enabling analysts to make faster, more informed model selections. Survey participants responded positively to using layered explanations to support their model selection decisions. Managers and engineers will use our documented process and framework to guide the design of this future system.

On a personal level, learning a new domain and making sense of complex information well enough to design useful, impactful experiences showed me how important the design thinking process truly is. Seeing how small design decisions could meaningfully influence trust, efficiency, and collaboration reshaped how I think about building AI-powered systems that are both responsible and operationally impactful.